Hardware of LEO III

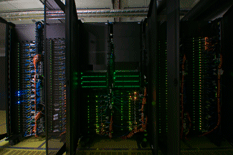

Cluster

The whole cluster consists of three air-cooled iDataPlex racks:

Front views: doors closed and doors open

- The middle one of the three racks contains the main infrastructure components for network and storage, as well as the three GPU nodes:

Front views

Compute nodes

- 162 compute nodes, each of which comprises:

- 2 Intel six-core Xeon X5650 CPUs (12 cores in total, running at 2.7 GHz)

- 24 GB DDR3 ECC RAM (2 GB per core)

- Single-Port 4x QDR Infiniband PCIe 2.0 HCA

- 250 GB SATA2 hard disk

Close-up view of some rack-mounted nodes

- 3 GPU nodes, additionally equipped with:

- 2 NVIDIA Tesla M2090 graphics cards

- an extra 24 GB of main memory

- One master node for login and job submission

- 2 Intel six-core Xeon X5650 CPUs

- 24 GB DDR3 RAM

- RAID controller (configured with RAID 1)

- 2 × 146 GB 15k 3.5'' hot-swap SAS hard disks

- Single-Port 4x QDR Infiniband PCIe 2.0 HCA

- Dual-port SFP+ 10GbE PCIe 2.0 Adapter
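The per-node figures above can be rolled up into cluster-wide totals. The following is a minimal sketch, not an official inventory: it assumes that the 3 GPU nodes are counted among the 162 compute nodes (the list does not say so explicitly) and it leaves out the master node. All names in the code are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node figures taken from the compute node list."""
    sockets: int
    cores_per_socket: int
    ram_gb: int

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket


COMPUTE_NODE = NodeSpec(sockets=2, cores_per_socket=6, ram_gb=24)
N_COMPUTE = 162
N_GPU_NODES = 3                 # assumption: part of the 162 compute nodes
GPUS_PER_GPU_NODE = 2
EXTRA_RAM_PER_GPU_NODE_GB = 24  # "an extra 24 GB of main memory"


def cluster_totals() -> dict:
    """Aggregate cores, memory and GPUs over all compute nodes."""
    cores = N_COMPUTE * COMPUTE_NODE.cores
    ram_gb = (N_COMPUTE * COMPUTE_NODE.ram_gb
              + N_GPU_NODES * EXTRA_RAM_PER_GPU_NODE_GB)
    return {
        "cores": cores,
        "ram_gb": ram_gb,
        "ram_per_core_gb": COMPUTE_NODE.ram_gb / COMPUTE_NODE.cores,
        "gpus": N_GPU_NODES * GPUS_PER_GPU_NODE,
    }


if __name__ == "__main__":
    for key, value in cluster_totals().items():
        print(f"{key}: {value}")
```

Under these assumptions the compute partition has 1944 cores and 3960 GB of main memory. If the GPU nodes are in fact separate from the 162 compute nodes, both totals grow accordingly.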

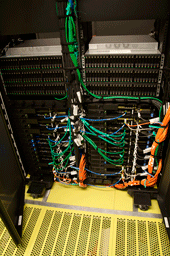

Network devices

- Infiniband

- 3 Mellanox IS5030 36-port managed QDR spine switches

- 7 Mellanox IS5030 36-port managed QDR leaf switches

- Gigabit-Ethernet

- 3 × 48-port 1 Gb Ethernet switches

- 2 SFP modules (fiber optic uplinks to university LAN)
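For orientation, the link rate of a 4x QDR Infiniband port and the switch port counts can be worked out from the figures above. This is a rough sketch: the 10 Gbit/s per-lane signalling rate and the 8b/10b line encoding are general properties of QDR Infiniband, not statements from this page, and the function names are illustrative. It makes no claim about how the spine and leaf switches are actually cabled.

```python
LANES = 4                      # "4x" link width
QDR_SIGNAL_GBIT_PER_LANE = 10  # QDR signalling rate per lane
ENCODED_BITS = 10              # 8b/10b: 10 bits on the wire ...
PAYLOAD_BITS = 8               # ... carry 8 bits of data


def qdr_4x_rates() -> tuple:
    """Return (signalling Gbit/s, data Gbit/s, data GB/s) per direction."""
    signalling = LANES * QDR_SIGNAL_GBIT_PER_LANE
    data_gbit = signalling * PAYLOAD_BITS // ENCODED_BITS
    return signalling, data_gbit, data_gbit / 8


def infiniband_switch_ports(spines: int = 3, leaves: int = 7,
                            ports_per_switch: int = 36) -> int:
    """Total number of Infiniband switch ports (uplinks included)."""
    return (spines + leaves) * ports_per_switch


def ethernet_switch_ports(switches: int = 3, ports_per_switch: int = 48) -> int:
    """Total number of 1 Gb Ethernet switch ports."""
    return switches * ports_per_switch


if __name__ == "__main__":
    print(qdr_4x_rates())
    print(infiniband_switch_ports(), ethernet_switch_ports())
```

Each 4x QDR port therefore signals at 40 Gbit/s and carries at most 32 Gbit/s (4 GB/s) of data per direction. The ten Infiniband switches provide 360 ports in total, part of which is used for links between leaf and spine switches.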

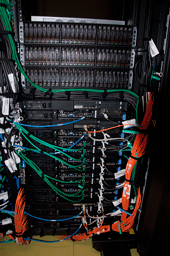

Storage system

The storage system offers a total raw (gross) capacity of 86 TB and consists of:

- two disk systems:

- 2 FC-SAS dual storage controllers (2 controller enclosures)

- 4 EXP3524 expansion units with Environmental Service Modules (4 SAS enclosures)

- 144 × 600 GB 10k 2.5'' SAS disks

Front view

- four redundant file servers, each with the following configuration:

- 1 Intel Xeon Quad-Core E5630 CPU

- 32 GB DDR3 RAM

- RAID controller (configured with RAID 1)

- 4 × 146 GB 15k 2.5'' hot-swap SAS hard disks

- Single-Port 4x QDR Infiniband PCIe 2.0 HCA
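The quoted raw capacity follows directly from the disk count. The short sketch below reproduces the arithmetic and also expresses the figure in binary units. The even spread of disks over the six enclosures is an assumption made for illustration, and the usable capacity after RAID and file system overhead is lower and is not stated here.

```python
DISKS = 144
DISK_GB = 600        # decimal gigabytes, as quoted by the disk vendor
ENCLOSURES = 2 + 4   # 2 controller enclosures + 4 expansion units


def raw_capacity() -> dict:
    """Raw (gross) capacity of the disk systems, before any RAID overhead."""
    total_bytes = DISKS * DISK_GB * 10**9
    return {
        "tb": total_bytes / 10**12,    # decimal terabytes
        "tib": total_bytes / 2**40,    # binary tebibytes
        # assumption: disks are spread evenly over all enclosures
        "disks_per_enclosure": DISKS / ENCLOSURES,
    }


if __name__ == "__main__":
    cap = raw_capacity()
    print(f"{cap['tb']:.1f} TB raw, {cap['tib']:.1f} TiB raw, "
          f"{cap['disks_per_enclosure']:.0f} disks per enclosure")
```

144 disks of 600 GB each give 86.4 TB, which matches the 86 TB stated above, or roughly 78.6 TiB in binary units.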

Photos: Wolfgang Kapferer