By Viktor Daropoulos

1. Introduction

In this post we present the concept of variational networks (VNs), introduced in [3], which are residual neural networks specifically trained for image denoising and image deblurring. Inspired by variational and optimization theory, these networks bridge the gap between traditional variational methods and modern machine learning, and they show great potential for image reconstruction tasks, as they outperform traditional variational techniques. Their incremental structure allows for easy parallelization, and both convex and non-convex models are trained using a single incremental proximal algorithm.

2. Variational Networks

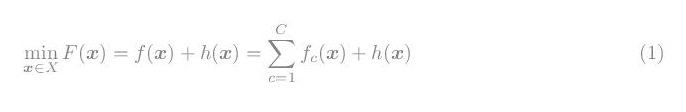

Variational networks (VNs) are motivated by the incremental proximal gradient method and, under some assumptions, share the same structure as residual networks. The incremental proximal gradient method is particularly useful for additive cost functions, which are very common in large-scale machine learning applications. Specifically, the method applies when the cost function has the form:

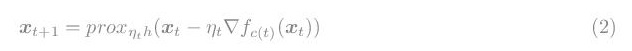

Here f(x) aggregates the smooth components fc(x) and is convex and lower semicontinuous, whereas h(x) is also convex and lower semicontinuous but possibly non-smooth. The minimizer is approached iteratively by:

$$x_{t+1} = \operatorname{prox}_{\eta_t h}\big(x_t - \eta_t \nabla f_{c(t)}(x_t)\big)$$

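where prox(•) denotes the proximal mapping, namely:

$$\operatorname{prox}_{\eta h}(z) = \arg\min_{x} \left( h(x) + \frac{1}{2\eta} \lVert x - z \rVert_2^2 \right)$$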

This method is guaranteed to converge for a diminishing step size ηt with

$$\sum_{t=1}^{\infty} \eta_t = \infty, \qquad \sum_{t=1}^{\infty} \eta_t^2 < \infty$$

for random or cyclic component selection c(t), whereas approximate convergence is additionally proven for a constant step size [1]. Approximate convergence is also possible, even for non-convex component functions fc(x), using the NIPS framework [2]. Note, however, that all component functions fc(x) must be Lipschitz continuous.

A variational unit (VU) is nothing more than one step of the incremental proximal gradient method, where we assume that the component functions are selected in a cyclic fashion, namely c(t) = mod(t, C) + 1. A variational network is built by simply stacking variational units one after another, as shown in Figure 1.
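To make the stacking of variational units concrete, here is a minimal NumPy sketch, not the implementation from [3]. It assumes a toy problem of our own choosing: quadratic components fc(x) = 0.5·||x − b_c||² and h equal to the indicator of the non-negative orthant, so that the proximal mapping is a pointwise projection. The names `prox_nonneg`, `variational_unit`, and `variational_network` are hypothetical, and the component index is 0-based, i.e. `t % C` instead of mod(t, C) + 1.

```python
import numpy as np

def prox_nonneg(z, eta):
    """Proximal map of the indicator of {x >= 0}: a pointwise projection (eta has no effect)."""
    return np.maximum(z, 0.0)

def variational_unit(x, grad_fc, eta, prox):
    """One incremental proximal gradient step on a single component gradient."""
    return prox(x - eta * grad_fc(x), eta)

def variational_network(x0, grads, num_steps, step_size, prox):
    """Stack `num_steps` variational units, cycling through the component gradients."""
    x = x0
    C = len(grads)
    for t in range(num_steps):
        c = t % C  # 0-based version of c(t) = mod(t, C) + 1
        x = variational_unit(x, grads[c], step_size(t), prox)
    return x

# Toy problem (assumption): f_c(x) = 0.5 * ||x - b_c||^2, h = indicator of x >= 0.
targets = [np.array([1.0, -2.0]), np.array([3.0, -4.0])]
grads = [lambda x, b=b: x - b for b in targets]

x_final = variational_network(
    x0=np.zeros(2),
    grads=grads,
    num_steps=2000,
    step_size=lambda t: 1.0 / (t + 2),  # diminishing: the sum diverges, the sum of squares converges
    prox=prox_nonneg,
)
print(x_final)
```

For this toy problem the constrained minimizer is the mean of the targets projected onto the non-negative orthant, i.e. (2, 0), and the iterate approaches it as the step size shrinks.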

Revisiting the structure of the residual unit, we see that it can be described by the iteration (also visualised in Figure 2):

where:

Figure 1: Left: VU, Right: VN [3]

Figure 2: Residual Unit [3]

By comparing the structure of the residual unit and the variational unit we can see that if:

then gt(xt) corresponds to −∇fc(t)(xt). Consequently, residual and variational units share the same computational structure. Since residual networks avoid the degradation problem, we can expect the same for VNs. Furthermore, VNs benefit from the rich theory of incremental methods, including convergence results, convex optimization theory [3], and parallelism.
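The structural equivalence can be checked numerically on a small example. The sketch below rests on assumptions that are ours, not taken from [3]: a component function of the form fc(x) = Σ φ((Kx)_i) with the smooth potential φ(z) = log(1 + z²), a unit step size, and ReLU as the proximal mapping. Under these assumptions the gradient step is a residual function with tied weights (second operator equal to −Kᵀ, activation equal to φ'). All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
K = rng.standard_normal((5, 3))  # hypothetical linear operator (e.g. a small filter bank)
x = rng.standard_normal(3)

def phi(z):
    """Assumed smooth potential."""
    return np.log1p(z ** 2)

def phi_prime(z):
    """Derivative of the potential, used as the activation function."""
    return 2.0 * z / (1.0 + z ** 2)

def energy(x):
    """Assumed component function f_c(x) = sum_i phi((K x)_i)."""
    return np.sum(phi(K @ x))

def grad_energy(x):
    """Gradient of the energy: K^T phi'(K x)."""
    return K.T @ phi_prime(K @ x)

def relu(z):
    return np.maximum(z, 0.0)

def variational_unit(x):
    """Gradient step with unit step size followed by projection onto x >= 0."""
    return relu(x - grad_energy(x))

def residual_unit(x, K1, K2, activation):
    """Skip connection plus residual function g(x) = K2 a(K1 x), followed by ReLU."""
    return relu(x + K2 @ activation(K1 @ x))

vu_out = variational_unit(x)
ru_out = residual_unit(x, K1=K, K2=-K.T, activation=phi_prime)
print(np.allclose(vu_out, ru_out))
```

The design point is the weight tying: a generic residual unit learns both operators freely, whereas the variational unit obtains the second one as the negative transpose of the first because the residual is the gradient of an energy.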

3. Image Denoising

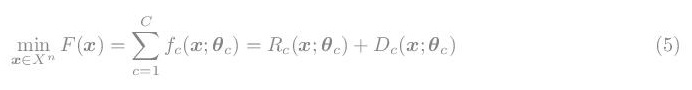

With the definition of a variational unit/network in hand, we can apply it to image denoising. In this setting we consider a variational model of the form:

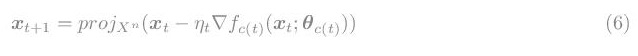

where Rc(x; θc) corresponds to regularization and Dc(x; θc) models the data term. Both terms are parameterized by θc, the vector of parameters that the neural network needs to learn for each component during the training phase. Applying the definition of the variational unit to this image reconstruction setting, we end up with the iteration:

$$x_{t+1} = \operatorname{proj}_{X^n}\big(x_t - \eta_t \nabla f_{c(t)}(x_t; \theta_{c(t)})\big)$$

The proximal mapping in this case reduces to a projection onto Xn, the set of non-negative vectors (non-negativity is meant pointwise), since we are dealing with images. Figure 3 corresponds to an energy minimization denoising scheme.

Figure 3: Convex and Non-convex N-VN [3]
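As a rough illustration of the denoising iteration, the following NumPy sketch runs a few variational units on a 1-D signal. It is a simplified stand-in, not the model of [3]: the regularizer (a log-penalty on forward differences), the quadratic data term, the step size, and the parameter values in `thetas` are all assumptions chosen by hand, whereas in a VN the parameters θc would be learned during training. The function names are hypothetical.

```python
import numpy as np

def grad_regularizer(x, weight):
    """Gradient of R(x) = weight * sum_i log(1 + (x[i+1] - x[i])^2), a hand-picked smoothness prior."""
    d = np.diff(x)                  # forward differences
    psi = 2.0 * d / (1.0 + d ** 2)  # derivative of log(1 + d^2)
    g = np.zeros_like(x)
    g[:-1] -= psi                   # each difference depends on x[i] with coefficient -1
    g[1:] += psi                    # and on x[i+1] with coefficient +1
    return weight * g

def grad_data(x, y, lam):
    """Gradient of D(x) = 0.5 * lam * ||x - y||^2."""
    return lam * (x - y)

def denoise_vn(y, thetas, eta=0.1):
    """Run one variational unit per parameter set theta_c = (weight, lam), starting from the noisy input."""
    x = y.copy()
    for weight, lam in thetas:
        step = grad_regularizer(x, weight) + grad_data(x, y, lam)
        x = np.maximum(x - eta * step, 0.0)  # projection onto the non-negative vectors
    return x

rng = np.random.default_rng(0)
clean = np.concatenate([np.full(20, 0.2), np.full(20, 0.8)])
noisy = clean + 0.1 * rng.standard_normal(clean.size)
thetas = [(1.0, 0.5)] * 10  # placeholder values; in a VN these would be learned
denoised = denoise_vn(noisy, thetas)
```

A constant non-negative signal is a fixed point of this scheme, since both gradients vanish there, which gives a simple sanity check for the gradient code.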