Grid Computing

Grid Computing is described by Foster, Kesselman and Tuecke as follows:

"The sharing that we are concerned with is not primarily file exchange but rather direct access to computers, software, data, and other resources, as is required by a range of collaborative problem-solving and resource-brokering strategies emerging in industry, science, and engineering. This sharing is, necessarily, highly controlled, with resource providers and consumers defining clearly and carefully just what is shared, who is allowed to share, and the conditions under which sharing occurs. A set of individuals and/or institutions defined by such sharing rules form what we call a virtual organization."

(The Anatomy of the Grid, Intl. J. Supercomputer Applications, 2001; 15 (3), Ian Foster, Carl Kesselman, Steven Tuecke)
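The quote defines a virtual organization through sharing rules that state what is shared, who may share, and under which conditions. A minimal sketch of such a rule check follows; the class names, the CPU-hour quota and the user names are hypothetical and only illustrate the idea, not how any real grid middleware expresses its policies.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model of a virtual organization (VO): sharing rules that state
# what is shared, who is allowed to share, and the conditions for sharing.

@dataclass(frozen=True)
class Request:
    user: str
    resource: str
    cpu_hours: float

@dataclass
class SharingRule:
    resource: str                          # what is shared
    allowed_users: frozenset[str]          # who is allowed to share
    condition: Callable[[Request], bool]   # conditions under which sharing occurs

@dataclass
class VirtualOrganization:
    name: str
    rules: list[SharingRule] = field(default_factory=list)

    def permits(self, req: Request) -> bool:
        # Access is granted only if one rule covers resource, user and condition.
        return any(
            rule.resource == req.resource
            and req.user in rule.allowed_users
            and rule.condition(req)
            for rule in self.rules
        )

vo = VirtualOrganization(
    name="example-vo",
    rules=[
        SharingRule(
            resource="cluster-a",
            allowed_users=frozenset({"alice", "bob"}),
            condition=lambda r: r.cpu_hours <= 100,  # hypothetical quota
        )
    ],
)

print(vo.permits(Request("alice", "cluster-a", 50)))   # True
print(vo.permits(Request("alice", "cluster-a", 500)))  # False: exceeds quota
print(vo.permits(Request("carol", "cluster-a", 10)))   # False: not a member
```

Keeping the condition as a predicate lets each rule express an arbitrary policy (quotas, time windows) without changing the membership check.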

HEP analysis

Analysis of High Energy Physics Data

The analysis of the data from HEP (high energy physics) experiments requires a sophisticated chain of data processing and data reduction. The data recorded by the experiment has to pass through a chain of reconstruction and analysis programs before it is examined by physicists. The stages can be characterized by their data format.

In the first stage, raw data is delivered by the data acquisition system of the experiment. The data consists of the raw measurements of the detectors: channel numbers, time measurements, charge depositions and other signals. Because of the large number of channels, a single event can be expected to have a size of the order of several hundred kilobytes to several megabytes. Integrated over a year of data taking, the overall data volume is expected to be of the order of a petabyte. The data has to be recorded safely on permanent storage.
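To relate the per-event size to the yearly volume, the short calculation below counts how many events fit into one petabyte for a few sizes within the quoted range. It assumes decimal units (1 PB = 10^15 bytes); the specific sizes 300 kB, 1 MB and 5 MB are illustrative picks, not experiment figures.

```python
# Back-of-the-envelope arithmetic: events per petabyte for illustrative event sizes.
# Decimal units are assumed (1 PB = 10**15 bytes).
PETABYTE = 10**15
KILOBYTE = 10**3
MEGABYTE = 10**6

def events_per_volume(volume_bytes: int, event_size_bytes: int) -> int:
    """Number of whole events of a given size that fit into a storage volume."""
    return volume_bytes // event_size_bytes

for label, size in [("300 kB", 300 * KILOBYTE), ("1 MB", MEGABYTE), ("5 MB", 5 * MEGABYTE)]:
    n = events_per_volume(PETABYTE, size)
    print(f"{label:>6} per event -> {n:.1e} events per petabyte")
```

At about 1 MB per event, a petabyte corresponds to roughly a billion recorded events.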

The data is then processed in a complex reconstruction step. Raw numbers are converted into physical parameters such as space coordinates or calibrated energy deposits. A further pattern recognition step translates these numbers into parameters of the observed particles, characterizing them by their momenta or their energy. The output of this processing step is called reconstructed data. Its size is typically of the same order as that of the raw data. Data in this format has to be distributed to a limited number of users for detector studies and some specific analyses.
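A toy version of the two reconstruction stages is sketched below: raw channel hits are calibrated into positioned energy deposits, and a deliberately simple "pattern recognition" merges them into one particle candidate. The channel gains, pedestal, channel pitch and the single-cluster rule are all hypothetical; real reconstruction software is far more elaborate.

```python
from dataclasses import dataclass

# Toy reconstruction. All calibration constants and the clustering rule are
# hypothetical values chosen only for illustration.

@dataclass(frozen=True)
class RawHit:
    channel: int  # detector channel number
    adc: int      # digitized charge deposition (ADC counts)

@dataclass(frozen=True)
class EnergyDeposit:
    position: float  # space coordinate along a 1-D detector (cm)
    energy: float    # calibrated energy (GeV)

GAIN = {0: 0.010, 1: 0.012, 2: 0.011, 3: 0.010}  # GeV per ADC count, per channel
PEDESTAL = 5            # ADC counts subtracted from every channel
CHANNEL_PITCH_CM = 2.0  # distance between neighbouring channels

def calibrate(hits: list[RawHit]) -> list[EnergyDeposit]:
    """Convert raw numbers into physical parameters (position, energy)."""
    deposits = []
    for hit in hits:
        signal = hit.adc - PEDESTAL
        if signal <= 0:
            continue  # at or below pedestal: no real deposit
        deposits.append(EnergyDeposit(position=hit.channel * CHANNEL_PITCH_CM,
                                      energy=signal * GAIN[hit.channel]))
    return deposits

def cluster_energy(deposits: list[EnergyDeposit]) -> tuple[float, float]:
    """Merge all deposits into one particle candidate: total energy and
    energy-weighted position."""
    if not deposits:
        raise ValueError("no deposits above pedestal")
    total = sum(d.energy for d in deposits)
    centroid = sum(d.position * d.energy for d in deposits) / total
    return total, centroid

raw_event = [RawHit(0, 3), RawHit(1, 105), RawHit(2, 205), RawHit(3, 55)]
energy, position = cluster_energy(calibrate(raw_event))
print(f"candidate: E = {energy:.3f} GeV at x = {position:.2f} cm")
```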

To simplify the analysis procedure, only the most relevant quantities are stored in separate streams. This data is typically referred to as AOD (Analysis Object Data). The event size is significantly reduced, and this format provides the input for physics analysis. The amount of AOD for a year of data taking is expected to be of the order of 100 TB.
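The figures quoted above (about a petabyte of raw data versus about 100 TB of AOD per year) correspond to a reduction of roughly a factor of ten. The sketch below shows the idea of projecting a reconstructed event onto a reduced set of fields and measuring the size change; the field names and the choice of which quantities count as "relevant" are hypothetical, and JSON length is only a crude stand-in for storage size.

```python
import json

# Sketch of producing AOD-like records by keeping only analysis-level quantities.
# Field names and the selected content are hypothetical.
AOD_FIELDS = ("event_id", "particles")            # assumed event-level content
PARTICLE_FIELDS = ("type", "energy", "momentum")  # assumed per-particle summary

def to_aod(reco_event: dict) -> dict:
    """Project a reconstructed event onto the reduced AOD content."""
    aod = {key: reco_event[key] for key in AOD_FIELDS}
    aod["particles"] = [{k: p[k] for k in PARTICLE_FIELDS} for p in reco_event["particles"]]
    return aod

reco_event = {
    "event_id": 42,
    "calibrated_hits": [{"channel": c, "energy": 0.01 * c} for c in range(1000)],
    "particles": [
        {"type": "muon", "energy": 25.0, "momentum": [10.0, 5.0, 21.8], "track_hits": list(range(40))},
        {"type": "electron", "energy": 12.5, "momentum": [-3.0, 8.0, 9.1], "track_hits": list(range(30))},
    ],
}

aod_event = to_aod(reco_event)
reco_size = len(json.dumps(reco_event))  # crude size proxy
aod_size = len(json.dumps(aod_event))
print(f"reconstructed: {reco_size} bytes, AOD: {aod_size} bytes, "
      f"reduction factor {reco_size / aod_size:.0f}")
```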

In the course of the analysis, physicists often perform a further data reduction. Sometimes skims for interesting events are performed. Often physicists define their own ad hoc data format. In ATLAS these datasets are known as DPD (Derived Physics Data), in CMS as PAT skims (Physics Analysis Toolkit).
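A skim can be pictured as a filter that keeps interesting events and writes them in a compact, user-defined format. In the sketch below, the selection (at least two muons above a 20 GeV threshold) and the output tuple (event number, summed muon energy) are hypothetical ad hoc choices, not the actual DPD or PAT definitions.

```python
from typing import Iterable, Iterator

# Sketch of a skim with a hypothetical selection and a hypothetical output format.

def selected_muons(event: dict, min_energy: float) -> list[dict]:
    """Muons above the energy threshold."""
    return [p for p in event["particles"] if p["type"] == "muon" and p["energy"] > min_energy]

def skim(events: Iterable[dict], min_energy: float = 20.0) -> Iterator[tuple[int, float]]:
    """Yield (event_id, summed energy of selected muons) for events with >= 2 such muons."""
    for event in events:
        muons = selected_muons(event, min_energy)
        if len(muons) >= 2:
            yield event["event_id"], sum(p["energy"] for p in muons)

events = [
    {"event_id": 1, "particles": [{"type": "muon", "energy": 35.0}, {"type": "muon", "energy": 28.0}]},
    {"event_id": 2, "particles": [{"type": "muon", "energy": 35.0}, {"type": "electron", "energy": 40.0}]},
    {"event_id": 3, "particles": [{"type": "muon", "energy": 50.0}, {"type": "muon", "energy": 5.0}]},
]
print(list(skim(events)))  # [(1, 63.0)]
```

Using a generator keeps memory use flat, which matters when the input is a large event stream rather than a short list.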

Simulated event data, the Monte Carlo events, deserve special consideration. During the analysis, the sensitivity and coverage of the detectors have to be studied carefully. Such studies are based on careful and detailed simulation of events.
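As a small example of how simulation is used to study detector coverage, the sketch below estimates a geometric acceptance by Monte Carlo sampling. It assumes isotropically emitted particles and a hypothetical detector covering the pseudorapidity range |eta| < 2.5; for this idealized case the exact answer is tanh(2.5), which serves as a check on the sampled estimate.

```python
import math
import random

# Monte Carlo estimate of geometric acceptance. Illustrative assumptions:
# isotropic emission and a hypothetical detector covering |eta| < ETA_MAX.
ETA_MAX = 2.5

def pseudorapidity(theta: float) -> float:
    """eta = -ln(tan(theta / 2)) for a polar angle theta in (0, pi]."""
    return -math.log(math.tan(theta / 2.0))

def estimate_acceptance(n_events: int, eta_max: float = ETA_MAX, seed: int = 1) -> float:
    """Fraction of simulated particles that fall inside the detector coverage."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    accepted = 0
    for _ in range(n_events):
        # Isotropic emission: cos(theta) is uniformly distributed in [-1, 1).
        theta = math.acos(rng.uniform(-1.0, 1.0))
        if abs(pseudorapidity(theta)) < eta_max:
            accepted += 1
    return accepted / n_events

mc = estimate_acceptance(100_000)
exact = math.tanh(ETA_MAX)  # isotropic case: accepted iff |cos(theta)| < tanh(eta_max)
print(f"Monte Carlo acceptance: {mc:.4f}, analytic: {exact:.4f}")
```

In realistic studies the analytic shortcut is unavailable, because detector geometry, material and response are simulated in detail; that is exactly why Monte Carlo events are needed.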

Tier structure

The Organization of the Global Infrastructure

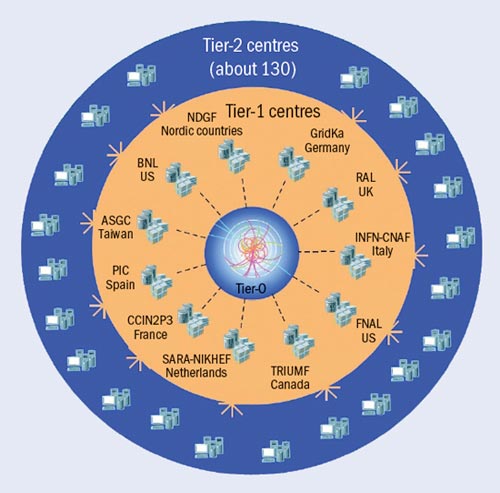

Already at an early stage it was recognized that analysis of data on the foreseen scale would require careful planning and organization. Different computing centres would have to have different roles according to their resources and their geographical location. The MONARC working group (Models Of Networked Analysis at Regional Centres) defined a tiered structure that groups computing centres according to their characteristics.

The Tier-0 is the computing centre at CERN. As CERN hosts the experiments, its computing centre has a special role. All original data must be recorded to permanent storage there. Initial processing of the data is then performed on site to provide rapid feedback on the operation of the experiments. Finally, the data is sent to other computing centres for analysis.

A number of large computing centres (eleven in total) take the role of Tier-1 centres. They receive data directly from CERN and provide additional permanent storage. These centres also provide the computing resources for reprocessing the data, which is required at a later stage of the analysis. As major facilities, they also have a special role in providing reliable services such as databases and catalogues to the community. They are also responsible for collecting the simulated events produced at the higher-tier centres (Tier-2 and Tier-3).
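The flow from Tier-0 to the Tier-1 centres can be pictured as a placement table in which every raw dataset keeps its original copy at CERN plus one additional permanent copy at a Tier-1. The sketch below uses simple round-robin assignment; the site and dataset names are hypothetical, and round-robin is a simplification rather than the policy actually used.

```python
from itertools import cycle

# Illustrative only: Tier-0 keeps the original copy of each raw dataset and one
# extra permanent copy goes to a Tier-1 site. Names and round-robin are assumptions.

def distribute(datasets: list[str], tier1_sites: list[str]) -> dict[str, list[str]]:
    """Return, for each dataset, the sites holding a permanent copy."""
    placement = {}
    next_site = cycle(tier1_sites)  # round-robin over Tier-1 sites
    for ds in datasets:
        placement[ds] = ["Tier-0", next(next_site)]
    return placement

placement = distribute([f"run{n:03d}" for n in range(1, 6)], ["T1-A", "T1-B", "T1-C"])
for ds, sites in placement.items():
    print(ds, sites)
```

A weighted scheme (for example, proportional to each site's storage capacity) would be a natural refinement, but it is not described in the text.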

A large number of sites serve as Tier-2 centres. Their role is to provide the bulk of computing resources for simulation and analysis. They are typically equipped with large disk storage that provides temporary storage for data required for analysis. In total, about a hundred such centres have been identified.

The model is complemented by a large number of smaller computing centres at various universities and laboratories, the Tier-3 centres. Their main role is to provide analysis capacity for local users. Computing resources for simulation can be provided to the experiments on an opportunistic basis. Other resources are usually reserved for local users.
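The tier model described above can be summarized as a small lookup table. Counts and roles follow the text (the Tier-2 count is approximate, and no number is given for Tier-3, so it is left as None); the short role labels and the helper function are our own shorthand, not part of the MONARC definitions.

```python
from dataclasses import dataclass
from typing import Optional

# Summary of the tier model from the text. Role labels are informal shorthand.

@dataclass(frozen=True)
class Tier:
    name: str
    approx_count: Optional[int]  # None where the text gives no number
    roles: frozenset[str]

TIERS = [
    Tier("Tier-0", 1, frozenset({"record raw data", "prompt processing", "export to Tier-1"})),
    Tier("Tier-1", 11, frozenset({"permanent storage", "reprocessing",
                                  "databases and catalogues", "collect simulated events"})),
    Tier("Tier-2", 100, frozenset({"simulation", "analysis", "temporary disk storage"})),
    Tier("Tier-3", None, frozenset({"local analysis", "opportunistic simulation"})),
]

def tiers_with_role(keyword: str) -> list[str]:
    """Names of tiers having at least one role that contains the keyword."""
    return [t.name for t in TIERS if any(keyword in role for role in t.roles)]

print(tiers_with_role("analysis"))  # ['Tier-2', 'Tier-3']
print(tiers_with_role("simulat"))   # ['Tier-1', 'Tier-2', 'Tier-3']
```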